The 21st century is going to be a world of artificial intelligence (AI), whether we’re ready for it or not. And we’re probably not, especially not when it comes to health care.

Let's start with two tweets:

Chrissy Farr: "My favorite Friday quote: @Farzad_MD citing a peer at Mass General: 'Any doctor that can be replaced by a computer should be.'"

Trisha Greenhalgh: "I predict the very last doctor to be replaced by a robot will be the GP. Almost none of our work is algorithmic."

These sentiments are widely shared by physicians. They view what they do as uniquely human, and are pretty proud of it — although some now allow that AI might be able to augment them. Someday.

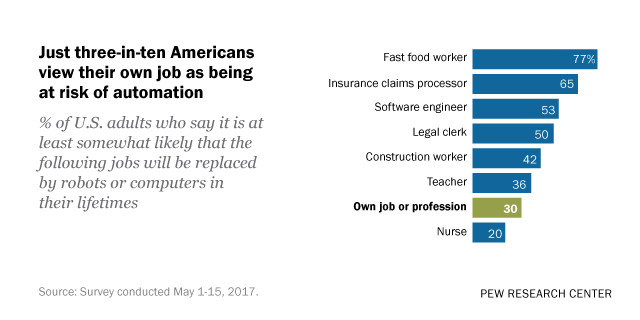

However, the feeling that one’s job is unlikely to be replaced by AI (or other automation) is not unique to physicians. Consider the following from a Pew Research Center survey:

In other words: too bad for those fast-food workers or those overpaid software engineers; my job is safe. And not just this year or next year, but for the rest of my life. There is likely some wishful thinking going on here, and that may apply to physicians as well.

Still, 79% of respondents believed that within 20 years doctors would rely on computers to diagnose and treat most diseases. The real question is the extent to which a human doctor will still be involved.

This is hard for physicians to accept. Caroline Poplin, MD, JD, FACP, argues “Medicine is Not Manufacturing.” She laments that: “Even as Western medicine has become more scientific over the centuries, the central role of the physician-patient relationship, with the interest of the patient being paramount, has remained the same — until recently.”

She believes that in recent years health care has become more of a business, and she concludes that the unique thing about medicine is its soul, “the essential element that has given patients comfort and relief for thousands of years and does so even today.”

Take that, computers.

The fact is, like it or not, that health care is a business, in which lots of people and organizations make a lot of money. It’s hard to walk into a hospital, or a surgery center, or even a doctor’s office, and not see that they are manufacturing operations.

They’re often not very efficient, mind you, and as much as they say they are all about the patient, that is often hard to believe.

That is not to say health care lacks empathetic, caring people, many of them physicians, but let’s not confuse that with the practice of medicine. Not anymore.

When physicians had little understanding of underlying disease states and even less ability to do anything about them, a confident bedside manner helped many patients. As we apply more science to diagnosing and treating patients, we should need it less.

What is it, exactly, that we’re trying to preserve? Physicians treat patients based on their experience with previous patients, the training they got, the research they remember, the imperfect and intermittent data they have, and their vaunted intuition. That is a lot of variables, potentially leaving wide gaps in what they can bring to bear on the care of a specific patient.

Human doctors do the best they can, in most cases, but they do make mistakes, they do order care that is unnecessary at best and inappropriate or dangerous at worst, and they don’t always agree with other doctors about patients.

An AI, on the other hand, can bring to bear the analysis of huge datasets, the depth and breadth of all existing research, and an infallible memory. Plus, it doesn’t have bad days, isn’t going to be tired, and doesn’t go on vacation.

It might even develop a bedside manner.

Your human doctor is almost certainly not the “best” in the world, if we could ever figure out who that was, but the best AI doctor could treat an unlimited number of patients.

One of the most interesting recent AI developments comes from a game. Google DeepMind’s program AlphaGo Zero learned the fiendishly difficult game of Go in three days, without any human instruction. It didn’t look at games played by humans, it didn’t have human Go experts programming it, it didn’t even play humans.

Instead, it was fed the rules, then played itself. Endlessly. Millions of games. It made stupid mistakes initially, but when all was said and done, after those three days it was playing at a level no human has ever reached. It learned many of the intricate strategies that humans have figured out over the centuries, as well as ones that Go experts called “amazing, strange, alien.”

“They’re how I imagine games from far in the future,” said Shi Yue, a top Go player from China. Go enthusiast Jonathan Hop put it more colorfully, describing them as “Go from an alternate dimension.”

Imagine this for health care.

We’ll start with more data. A lot more data. Not just from finally breaking down all those ridiculous silos, but also from ongoing monitoring through wearables and other devices, which will help establish our baseline health and give earlier warning when things are getting off track.

AI will rediscover ways of diagnosing and treating that we already know, along with ones that are “from far in the future.” That’s a good thing, and we should welcome it.

Dr. Bryan Vartabedian, for one, thinks that AI will force doctors to rethink what they do. Computers make information widely available, imaging more precise, and pattern recognition more feasible. He points out:

The end result is a paradox: We want the precision and specificity of the machine yet we want to believe that we can still do it all with our hands and eyes and ears.

We probably can’t. So we need to start redefining what the human doctor of the 21st century will do.

Dr. Vartabedian thinks the key question may boil down to “What can a human do that a machine can’t?” That is a question whose answer will be continually evolving — as will the role of physicians.

In a separate article, Dr. Vartabedian hints at an answer:

My work is about translation and connection… I help them negotiate the transition between life with a chronic condition and the healthy development that can create some semblance of a normal life… It’s a far cry from the work of a scientist. And I’m good with that.

The goal of AI in health care isn’t to get rid of human doctors, but it shouldn’t be to preserve them either. The goal should be to do what is best for patients, for us.

There will be a role for physicians in the future. Probably. But there will also be one for AI. It won’t be the same as human doctors, and that’s OK.
